At Google I/O on Tuesday, Google technical staff presented a menu of geeky treats in the hopes that software developers will pay to expand on the platforms and services offered by the Chocolate Factory.

Google CEO Sundar Pichai’s general-interest keynote was followed by the developer-focused portion of what is, after all, a developer conference. After Pichai’s broad-strokes sales pitch for the super-corp’s achievements, his team of hardware and software specialists took turns addressing the crowd at the Shoreline Amphitheatre in Mountain View, the gleaming heart of Silicon Valley, to show off the kinds of applications that Google’s infrastructure and tools now make possible.

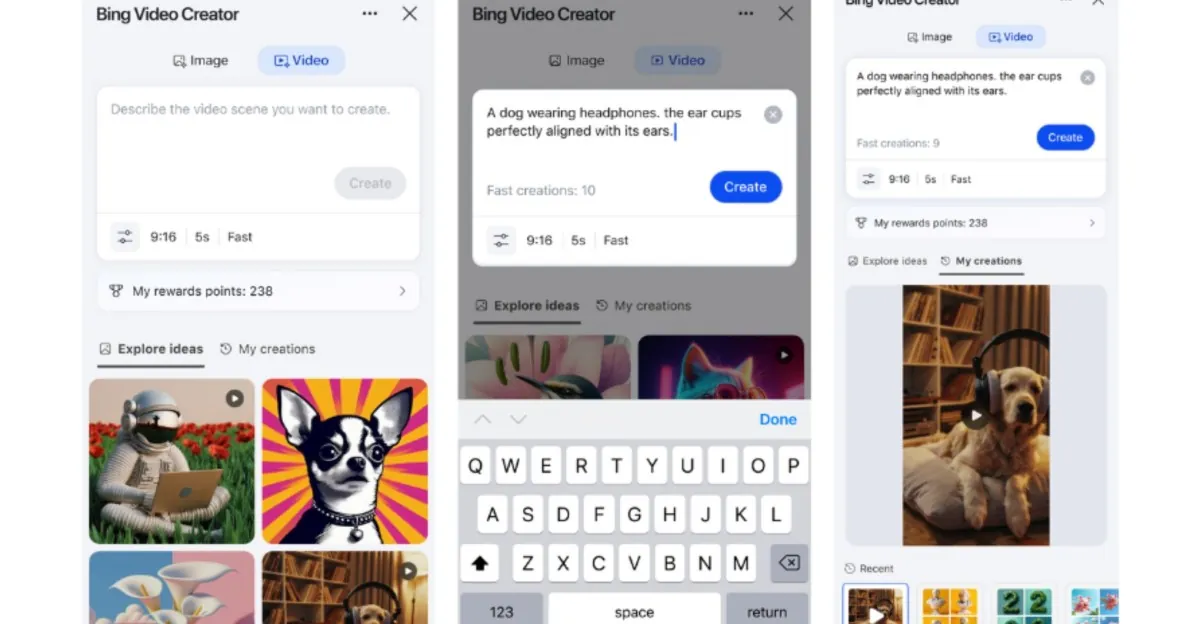

The egghead parade began with Josh Woodward, vice president of Google Labs, demonstrating Stitch, an experimental AI service for designing user interfaces for mobile and web applications.

“This is Stitch,” he said. “It starts with the design. So you can enter a prompt like ‘Make me an app for discovering California, the activities, the getaways,’ and paste it in. Then all you have to do is click Generate Design, and it will start creating a design for you.”

The results can be imported into Figma for further editing or exported as HTML and CSS.

Stitch lets users choose between Google’s Gemini 2.5 Pro and Gemini 2.5 Flash AI models, both praised during the main keynote for their capabilities, among them the ability to build AI apps that can hear and converse in 24 languages in real time.

Jules, Google’s asynchronous coding agent, is now available to the general public. Much like the recently released GitHub Copilot coding agent, Jules can write code, fix bugs, and run tests in GitHub repos, working on its own Git branch. It needs no human supervision while it works, but its human minder (the developer, if that’s still the right term) must still submit a pull request to merge the AI’s changes.

Google has also brought the Gemini 2.5 Pro model to Android Studio, along with two agentic capabilities: Journeys, which lets Gemini test apps, and Version Upgrade Agent, which automatically updates dependencies to their latest compatible versions, then builds the project to find and fix any errors the upgrades introduced.

Android developers also gained access to the ML Kit GenAI APIs, which use Gemini Nano for on-device tasks.

New CSS primitives let web developers build scrolling content areas, or carousels, with just a few lines of HTML and CSS.

According to Google, Pinterest tried the technology and cut its carousel code by 90 percent, from roughly 2,000 lines to 200.

“Creating beautiful, accessible, declarative, cross-browser user interfaces should not only be possible, but also simple,” stated Una Kravets, a Google Chrome staff developer relations engineer.

In the same vein, an Interest Invoker API is now available as an origin trial. It toggles popover menus when users show active interest in a particular element of a page, such as by hovering over it. In a Google demo that combined it with the Anchor Positioning and Popover APIs, the price of a movie ticket appeared when the user hovered over an image of the theater.

Meanwhile, Gemini is now integrated into Chrome DevTools, where it offers AI assistance to speed up debugging, performance optimization, and styling.

On Monday, Chrome 138 was released, allowing web developers who are enrolled in Google’s early preview program to test out the built-in (client-side) AI features made possible by Gemini Nano.

Chrome 138 Stable includes the Summarizer API, Language Detector API, Translator API, and Prompt API for Chrome Extensions. The Proofreader API and Prompt API with multimodal capabilities can be tested in Chrome 138 Canary, while the Writer and Rewriter APIs are accessible in origin trials.
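To give a feel for the built-in APIs, here is a minimal TypeScript sketch that chains the Language Detector and Translator APIs: detect the language of a string, then translate it only when it differs from the target. It assumes the `LanguageDetector.create()`, `detect()`, `Translator.create({ sourceLanguage, targetLanguage })`, and `translate()` shapes described in Chrome's built-in AI documentation. The `BuiltInAI` interface and the `translateIfNeeded` and `chromeBuiltInAI` helpers are hypothetical names of our own, introduced so the logic can be exercised against a stub outside the browser. Real code would also check each API's `availability()` first, since the on-device models may need to be downloaded.

```typescript
// Our own structural types for the shapes this sketch relies on;
// these are NOT official typings for Chrome's built-in AI APIs.
interface LanguageDetectionResult {
  detectedLanguage: string;
  confidence: number;
}
interface LanguageDetectorLike {
  detect(input: string): Promise<LanguageDetectionResult[]>;
}
interface TranslatorLike {
  translate(input: string): Promise<string>;
}
// Hypothetical abstraction so the logic can run against a stub.
interface BuiltInAI {
  createDetector(): Promise<LanguageDetectorLike>;
  createTranslator(sourceLanguage: string, targetLanguage: string): Promise<TranslatorLike>;
}

// Hypothetical helper: detect the input language, translate only if needed.
async function translateIfNeeded(
  ai: BuiltInAI,
  text: string,
  targetLanguage: string,
  minConfidence = 0.5,
): Promise<string> {
  const detector = await ai.createDetector();
  // Assumes results are ordered by confidence, highest first.
  const [top] = await detector.detect(text);
  // Leave the text alone if nothing was detected or the guess is weak.
  if (!top || top.confidence < minConfidence) return text;
  // Already in the target language: no translation needed.
  if (top.detectedLanguage === targetLanguage) return text;
  const translator = await ai.createTranslator(top.detectedLanguage, targetLanguage);
  return translator.translate(text);
}

// Adapter over Chrome's globals; returns null where they are not exposed
// (older browsers, Node.js, and so on).
function chromeBuiltInAI(): BuiltInAI | null {
  const g = globalThis as any;
  if (!("LanguageDetector" in g) || !("Translator" in g)) return null;
  return {
    createDetector: () => g.LanguageDetector.create(),
    createTranslator: (sourceLanguage: string, targetLanguage: string) =>
      g.Translator.create({ sourceLanguage, targetLanguage }),
  };
}
```

In a supporting browser, usage would look something like `const ai = chromeBuiltInAI(); if (ai) console.log(await translateIfNeeded(ai, "Bonjour le monde", "en"));`. Keeping the Chrome globals behind a small adapter is a design choice, not a requirement: it makes the feature detection explicit and lets the detect-then-translate logic be tested without a browser.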

Among the many AI-focused enhancements to Firebase, Google’s backend-as-a-service for app developers, is the ability to import designs from Figma.

Gemini Code Assist for individuals, a free version of Google’s AI coding assistant that entered public preview in February, is now generally available. There is also a premium version, and both now use Gemini 2.5 Pro.

Google Colab, a cloud-hosted Jupyter Notebook environment for data science and Python witchcraft, is also getting an AI makeover. Rolling out gradually, Colab AI promises an agentic assistant powered by Gemini 2.5 Flash.

Lest it be overshadowed by its proprietary Gemini siblings, Google’s open source Gemma model family has gained a few new members. Gemma 3n is a preview model that can run in as little as 2GB of RAM, and MedGemma is a multimodal model for comprehending medical text and images. Also on the horizon are DolphinGemma, “the world’s first large language model for dolphins,” and SignGemma, a model for understanding sign language.